Microsoft is creating some very cool “Cognitive Services” that we as developers can easily use in our applications to accomplish some amazing results with a few simple calls to these Azure services.


If you haven’t set up Cognitive Services yet, take a look at Setting up Azure Cognitive Services.

We’ll start with the “Face [Detection] API”

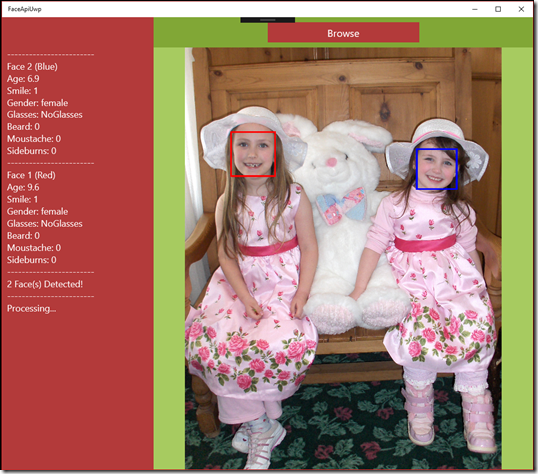

“Detect one or more human faces in an image and get back face rectangles for where in the image the faces are, along with face attributes which contain machine learning-based predictions of facial features. … The face attribute features available are: Age, Gender, Pose, Smile, and Facial Hair…”

As my eventual goal is to integrate these into HoloLens for you guys, we’ll be doing this integration in UWP (Universal Windows Platform), starting with desktop apps.

Completed project is up on GitHub @ FaceApiUwp

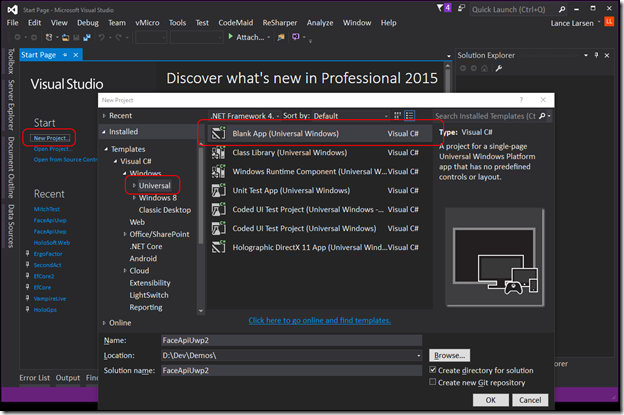

Ok, let’s dive right into code! Fire up Visual Studio…

1) “File” > “New” > “Project…”

2) “Templates” > “Visual C#” > “Windows” > “Universal” > “Blank App (Universal Windows)”

3) Name the project “FaceApiUwp” (note: I have this code complete and up on GitHub @ FaceApiUwp)

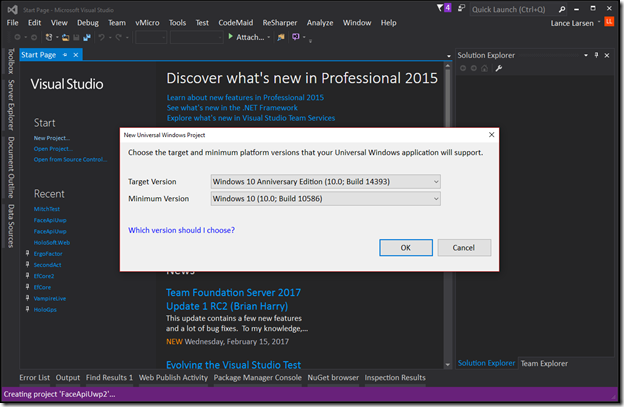

4) Click “OK”; the defaults should be fine.

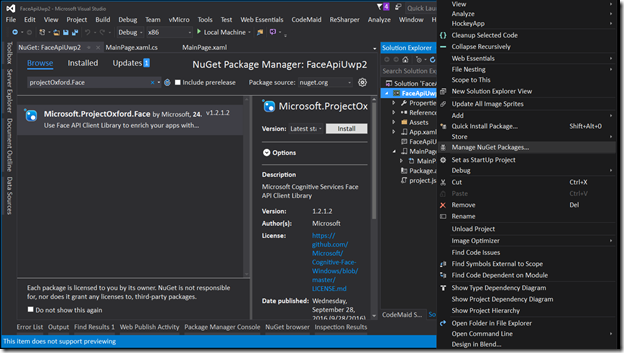

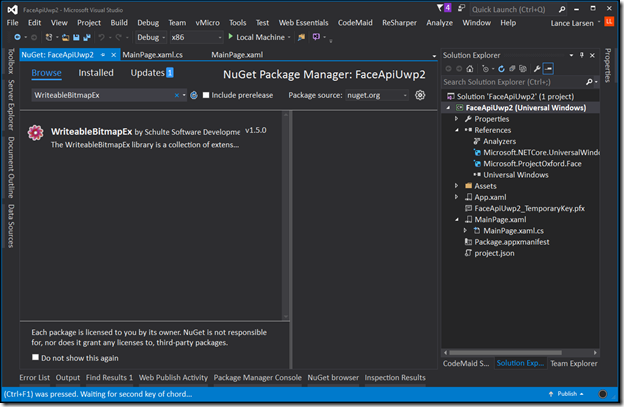

5) Right-click on the project > “Manage NuGet Packages…”

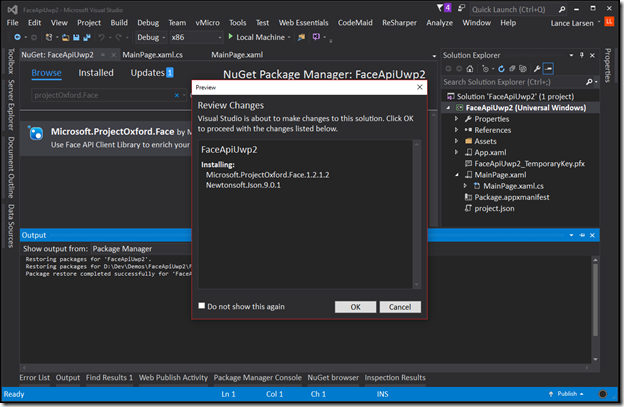

6) In the NuGet window > “Browse” > type “ProjectOxford.Face” (the package is Microsoft.ProjectOxford.Face) > “Install”

7) One more NuGet package: we’ll be drawing on writeable bitmaps for the graphics… Problem is, UWP’s WriteableBitmap only exposes a raw pixel buffer, without the drawing support we’re used to from WPF… So we’ll be using “WriteableBitmapEx” to get that functionality in UWP; add that to your project too.
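To get a feel for what WriteableBitmapEx saves us from: a UWP WriteableBitmap hands you its pixels as a raw buffer, and in the Bgra8 format this post loads images with, every pixel is four bytes in blue, green, red, alpha order. The sketch below uses a plain byte[] as a stand-in for that buffer. The Bgra8Buffer class and its methods are hypothetical (they are not WriteableBitmapEx’s API), and it assumes rows are packed with no padding, i.e. width * 4 bytes per row. It shows the kind of per-pixel index math a helper like DrawRectangle takes care of for us.

```csharp
// Hypothetical helper: a plain byte[] stands in for a WriteableBitmap pixel buffer.
// ASSUMPTION: Bgra8 layout (4 bytes per pixel: B, G, R, A) and no row padding,
// so one row of the image is width * 4 bytes long.
public static class Bgra8Buffer
{
    public const int BytesPerPixel = 4;

    // Byte offset of pixel (x, y) in a buffer whose rows are 'width' pixels wide.
    public static int Offset(int x, int y, int width) => (y * width + x) * BytesPerPixel;

    // Write one opaque pixel, silently ignoring coordinates outside the image.
    public static void SetPixel(byte[] pixels, int width, int height,
                                int x, int y, byte r, byte g, byte b)
    {
        if (x < 0 || y < 0 || x >= width || y >= height) return;
        int i = Offset(x, y, width);
        pixels[i] = b;       // blue comes first in Bgra8
        pixels[i + 1] = g;
        pixels[i + 2] = r;
        pixels[i + 3] = 255; // fully opaque alpha
    }

    // Draw a one-pixel rectangle outline from (x1, y1) to (x2, y2), inclusive.
    public static void DrawRectangle(byte[] pixels, int width, int height,
                                     int x1, int y1, int x2, int y2,
                                     byte r, byte g, byte b)
    {
        for (int x = x1; x <= x2; x++)
        {
            SetPixel(pixels, width, height, x, y1, r, g, b); // top edge
            SetPixel(pixels, width, height, x, y2, r, g, b); // bottom edge
        }
        for (int y = y1; y <= y2; y++)
        {
            SetPixel(pixels, width, height, x1, y, r, g, b); // left edge
            SetPixel(pixels, width, height, x2, y, r, g, b); // right edge
        }
    }
}
```

Multiply that loop by every outline, every face and every color conversion, and pulling in a library for it starts to look like a good deal.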

8) Open MainPage.xaml and replace the “<Grid>” element with the following…

[sourcecode language="xml" padlinenumbers="true"]
<Grid>
    <Grid.RowDefinitions>
        <RowDefinition Height="Auto"/>
        <RowDefinition Height="*"/>
    </Grid.RowDefinitions>
    <Grid.ColumnDefinitions>
        <ColumnDefinition Width="Auto"/>
        <ColumnDefinition Width="*"/>
    </Grid.ColumnDefinitions>

    <!-- Background panels: results column on the left, photo area on the right -->
    <Rectangle Grid.Row="0" Grid.Column="0" Fill="#B33A3A" Width="300" />
    <Rectangle Grid.Row="1" Grid.Column="0" Fill="#B33A3A" Width="300" />
    <Rectangle Grid.Row="0" Grid.Column="1" Fill="#81A736" />
    <Rectangle Grid.Row="1" Grid.Column="1" Fill="#A7CB60" />

    <Button x:Name="buttonBrowse"
            Margin="10" Height="40" Width="300"
            HorizontalAlignment="Center"
            Background="#B33A3A" FontSize="20" Foreground="White"
            Content="Browse..."
            Click="BrowseButton_Click" Grid.Row="0" Grid.Column="1"/>

    <Image x:Name="imagePhoto" Stretch="Uniform" Grid.Row="1" Grid.Column="1"/>

    <!-- Scroll bar settings belong on the ScrollViewer itself -->
    <ScrollViewer Grid.Row="1" Grid.Column="0"
                  HorizontalScrollBarVisibility="Disabled"
                  VerticalScrollBarVisibility="Auto">
        <TextBlock x:Name="textResults" Margin="10,0,10,10" Width="280"
                   Foreground="White" FontSize="18" TextWrapping="WrapWholeWords"/>
    </ScrollViewer>
</Grid>
[/sourcecode]

9) Then replace the contents of MainPage.xaml.cs with…

NOTE: see my Setting up Azure Cognitive Services post to get your own Face API key.

[sourcecode language="csharp"]

using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;
using Windows.Graphics.Imaging;
using Windows.Storage;
using Windows.Storage.Pickers;
using Windows.UI;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Controls;
using Windows.UI.Xaml.Media.Imaging;
using Microsoft.ProjectOxford.Face;
using Microsoft.ProjectOxford.Face.Contract;

// Must match the project name, so x:Class="FaceApiUwp.MainPage" in the XAML resolves
namespace FaceApiUwp
{
    public sealed partial class MainPage : Page
    {
        public MainPage()
        {
            this.InitializeComponent();
        }

        //----------------------------------------------------------------------
        //--- Add your own Face API key -- see
        //    http://lancelarsen.com/setting-up-azure-cognitive-services/
        //----------------------------------------------------------------------
        private readonly IFaceServiceClient _faceServiceClient
            = new FaceServiceClient("YOUR_FACE_API_KEY");

        private async void BrowseButton_Click(object sender, RoutedEventArgs e)
        {
            //------------------------------------------------------------------
            //--- Open File Dialog
            //------------------------------------------------------------------
            var filePicker = new FileOpenPicker();
            filePicker.ViewMode = PickerViewMode.Thumbnail;
            filePicker.SuggestedStartLocation = PickerLocationId.PicturesLibrary;
            filePicker.FileTypeFilter.Add(".jpg");
            filePicker.FileTypeFilter.Add(".jpeg");
            filePicker.FileTypeFilter.Add(".png");

            var file = await filePicker.PickSingleFileAsync();
            if (file == null || !file.IsAvailable) return;

            AppendMessage("Processing...");
            buttonBrowse.Content = "Processing...";
            buttonBrowse.IsEnabled = false;

            //------------------------------------------------------------------
            //--- Create a bitmap from the image file
            //--- Uses the NuGet package WriteableBitmapEx, which adds the
            //--- loading and drawing helpers UWP's WriteableBitmap lacks
            //--- https://writeablebitmapex.codeplex.com/
            //------------------------------------------------------------------
            WriteableBitmap bitmap;
            using (var fileStream = await file.OpenAsync(FileAccessMode.Read))
            {
                bitmap = await BitmapFactory.New(1, 1).FromStream(fileStream,
                    BitmapPixelFormat.Bgra8);
            }

            //------------------------------------------------------------------
            //--- Use the Azure Cognitive Services Face API to Locate and Analyse Faces
            //------------------------------------------------------------------
            using (var imageStream = await file.OpenStreamForReadAsync())
            {
                var faces = await DetectFaces(imageStream);

                buttonBrowse.Content = "Browse...";
                buttonBrowse.IsEnabled = true;

                if (faces == null) return;

                var faceCount = 1;
                AppendMessage("------------------------");
                AppendMessage($"{faces.Length} Face(s) Detected!");

                foreach (var face in faces)
                {
                    string colorName;
                    var color = SetColor(faceCount, out colorName);
                    DrawRectangle(bitmap, face, color, 10);

                    // AppendMessage prepends, so the "Face N" label is written
                    // last to make it appear above this face's attributes.
                    AppendMessage("------------------------");
                    AppendMessage($"Sideburns: {face.FaceAttributes.FacialHair.Sideburns}");
                    AppendMessage($"Moustache: {face.FaceAttributes.FacialHair.Moustache}");
                    AppendMessage($"Beard: {face.FaceAttributes.FacialHair.Beard}");
                    AppendMessage($"Glasses: {face.FaceAttributes.Glasses}");
                    AppendMessage($"Gender: {face.FaceAttributes.Gender}");
                    AppendMessage($"Smile: {face.FaceAttributes.Smile}");
                    AppendMessage($"Age: {face.FaceAttributes.Age}");
                    AppendMessage($"Face {faceCount++} ({colorName})");
                }
            }

            imagePhoto.Source = bitmap;
            AppendMessage("------------------------");
        }

        private async Task<Face[]> DetectFaces(Stream imageStream)
        {
            // Only request the attributes we display
            var attributes = new List<FaceAttributeType>();
            attributes.Add(FaceAttributeType.Age);
            attributes.Add(FaceAttributeType.Gender);
            attributes.Add(FaceAttributeType.Smile);
            attributes.Add(FaceAttributeType.Glasses);
            attributes.Add(FaceAttributeType.FacialHair);

            Face[] faces = null;
            try
            {
                // Arguments: image, returnFaceId, returnFaceLandmarks, attributes
                faces = await _faceServiceClient.DetectAsync(imageStream, true, true, attributes);
            }
            catch (FaceAPIException exception)
            {
                AppendMessage("------------------------");
                AppendMessage($"Face API Error = {exception.ErrorMessage}");
            }
            catch (Exception exception)
            {
                AppendMessage("------------------------");
                AppendMessage($"Face API Error = {exception.Message}");
            }
            return faces;
        }

        private static Color SetColor(int faceCount, out string colorName)
        {
            Color color;
            switch (faceCount)
            {
                case 1: color = Colors.Red; colorName = "Red"; break;
                case 2: color = Colors.Blue; colorName = "Blue"; break;
                case 3: color = Colors.Green; colorName = "Green"; break;
                case 4: color = Colors.Yellow; colorName = "Yellow"; break;
                case 5: color = Colors.Purple; colorName = "Purple"; break;
                default: color = Colors.Orange; colorName = "Orange"; break;
            }
            return color;
        }

        // Newest message goes on top of the results panel
        private void AppendMessage(string message)
        {
            textResults.Text = $"{message}\r\n{textResults.Text}";
        }

        private static void DrawRectangle(WriteableBitmap bitmap, Face face,
            Color color, int thickness)
        {
            var left = face.FaceRectangle.Left;
            var top = face.FaceRectangle.Top;
            var width = face.FaceRectangle.Width;
            var height = face.FaceRectangle.Height;
            DrawRectangle(bitmap, left, top, width, height, color, thickness);
        }

        private static void DrawRectangle(WriteableBitmap bitmap, int left,
            int top, int width, int height, Color color, int thickness)
        {
            var x1 = left;
            var y1 = top;
            var x2 = left + width;
            var y2 = top + height;

            bitmap.DrawRectangle(x1, y1, x2, y2, color);

            // Thicken the border by drawing outlines that grow outward 1 pixel at a time
            for (var i = 0; i < thickness; i++)
            {
                bitmap.DrawRectangle(x1--, y1--, x2++, y2++, color);
            }
        }
    }
}

[/sourcecode]
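The two DrawRectangle helpers turn a face’s Left, Top, Width and Height into the corners (x1, y1) and (x2, y2), then thicken the border by drawing extra outlines, each one pixel further out. Because x1-- and friends are post-decrements, the separate first DrawRectangle call and the first pass of the loop draw the same outline, so a thickness of 10 gives 10 distinct outlines at offsets 0 through 9. The pure-C# sketch below reproduces only that coordinate math, with no drawing. Box and OutlineMath are hypothetical names, and Box is just a stand-in for the Face API’s FaceRectangle.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for the Face API's FaceRectangle (Left, Top, Width, Height).
public readonly struct Box
{
    public Box(int left, int top, int width, int height)
    {
        Left = left; Top = top; Width = width; Height = height;
    }
    public int Left { get; }
    public int Top { get; }
    public int Width { get; }
    public int Height { get; }
}

public static class OutlineMath
{
    // Corner coordinates in the (x1, y1, x2, y2) form DrawRectangle expects.
    public static (int X1, int Y1, int X2, int Y2) Corners(Box box) =>
        (box.Left, box.Top, box.Left + box.Width, box.Top + box.Height);

    // The distinct nested outlines that make a "thick" border: offset 0 is the
    // face box itself, and each further outline sits one pixel further out.
    public static List<(int X1, int Y1, int X2, int Y2)> Outlines(Box box, int thickness)
    {
        var (x1, y1, x2, y2) = Corners(box);
        var result = new List<(int X1, int Y1, int X2, int Y2)>();
        for (int i = 0; i < thickness; i++)
        {
            result.Add((x1 - i, y1 - i, x2 + i, y2 + i));
        }
        return result;
    }
}
```

Growing outward (rather than inward) keeps the face itself unobscured, at the cost of the border spilling past the image edge for faces near the border.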

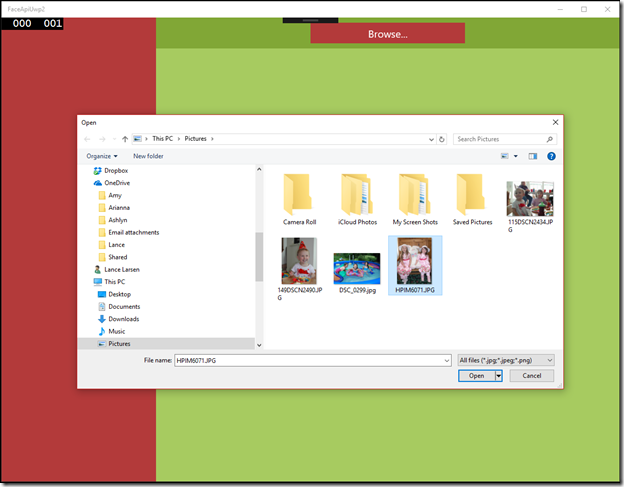

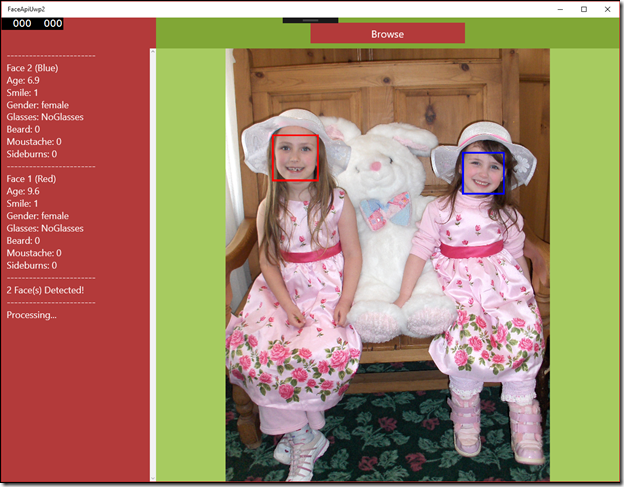
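One thing to keep in mind with group photos: SetColor gives faces 1 through 5 their own colors and hands Orange to every face from the sixth on, so those boxes can’t be told apart. The sketch below reproduces the same mapping as a lookup table, using color names only because Windows.UI.Colors isn’t available outside a UWP project. FacePalette is a hypothetical class, not part of either NuGet package.

```csharp
// Hypothetical, UWP-free version of SetColor's mapping: returns only the name.
public static class FacePalette
{
    // Faces 1-5 each get their own color; every later face shares the fallback.
    private static readonly string[] Names = { "Red", "Blue", "Green", "Yellow", "Purple" };
    private const string Fallback = "Orange";

    public static string NameFor(int faceCount) =>
        faceCount >= 1 && faceCount <= Names.Length ? Names[faceCount - 1] : Fallback;
}
```

With a table, supporting more distinct faces is a one-line change to the array instead of another case in the switch.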

10) Run it… Click “Browse...”, select a picture with faces (or one without), and see what happens!
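When it runs, notice that the results panel reads newest-first: AppendMessage puts each message in front of the existing text, which is why the loop writes “Face N (Color)” after that face’s attributes, so the label lands above them. The UI-free sketch below isolates that behavior; NewestFirstLog is a hypothetical class with a plain string standing in for the TextBlock.

```csharp
using System.Collections.Generic;

// Hypothetical, UI-free stand-in for AppendMessage: the "results panel" is a
// plain string, and each new message is placed in front of the existing text.
public sealed class NewestFirstLog
{
    public string Text { get; private set; } = "";

    public void Append(string message) => Text = $"{message}\r\n{Text}";

    // Lines top to bottom, as they would appear in the TextBlock.
    public IReadOnlyList<string> Lines =>
        Text.Split(new[] { "\r\n" }, System.StringSplitOptions.RemoveEmptyEntries);
}
```

Prepending keeps the latest result visible at the top without scrolling; the trade-off is that anything logged “about” a face has to be written before its heading.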

11) Now that we have it working, let’s touch on a couple of the important methods.

We start by creating a FaceServiceClient and passing in our Face API key from the Azure Service.

[sourcecode language="csharp"]

//----------------------------------------------------------------------
//--- Add your own Face API key
//--- see http://lancelarsen.com/setting-up-azure-cognitive-services/
//----------------------------------------------------------------------
private readonly IFaceServiceClient _faceServiceClient
    = new FaceServiceClient("YOUR_FACE_API_KEY");

[/sourcecode]
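Since the key is just a string handed to FaceServiceClient, a typo or leftover placeholder only shows up later, when DetectFaces reports a Face API error. A cheap pre-flight check can catch the obvious cases before any network call. FaceApiKeyCheck is hypothetical, and its rule that a key is exactly 32 hexadecimal characters is an assumption about the Azure Cognitive Services key format; a key that passes can still be wrong or revoked, which only the service can tell you.

```csharp
using System;
using System.Linq;

// Hypothetical pre-flight check to run before creating FaceServiceClient.
// ASSUMPTION: Cognitive Services keys are 32 hexadecimal characters.
public static class FaceApiKeyCheck
{
    public static bool LooksValid(string key) =>
        !string.IsNullOrWhiteSpace(key)
        && key.Length == 32
        && key.All(Uri.IsHexDigit); // 0-9, a-f, A-F
}
```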

12) Without dwelling on the WriteableBitmap or the other helper functions, let’s look at how we connect to the Face API.

It’s pretty straightforward once you see it in use. Note that we can add or remove FaceAttributeTypes when we call the API: the fewer we request, the faster the call. It’s pretty fast either way, so I’ve included all the ones we’re generally interested in for a basic example.

[sourcecode language="csharp"]

private async Task<Face[]> DetectFaces(Stream imageStream)
{
    // Only request the attributes we display
    var attributes = new List<FaceAttributeType>();
    attributes.Add(FaceAttributeType.Age);
    attributes.Add(FaceAttributeType.Gender);
    attributes.Add(FaceAttributeType.Smile);
    attributes.Add(FaceAttributeType.Glasses);
    attributes.Add(FaceAttributeType.FacialHair);

    Face[] faces = null;
    try
    {
        // Arguments: image, returnFaceId, returnFaceLandmarks, attributes
        faces = await _faceServiceClient.DetectAsync(imageStream, true, true, attributes);
    }
    catch (FaceAPIException exception)
    {
        AppendMessage("------------------------");
        AppendMessage($"Face API Error = {exception.ErrorMessage}");
    }
    catch (Exception exception)
    {
        AppendMessage("------------------------");
        AppendMessage($"Face API Error = {exception.Message}");
    }
    return faces;
}

[/sourcecode]
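Under the hood, DetectAsync wraps the Face API’s REST “detect” operation. The hypothetical DetectRequest helper below only builds the request URL matching the arguments in DetectFaces (face IDs and landmarks on, plus the five attributes), which makes it easy to see that each FaceAttributeType is one more thing we ask the service to compute and send back. It is a sketch, not the SDK’s actual code: the v1.0 path, the westus region and the lower-camel attribute names are assumptions based on the REST API at the time, so check them against the current documentation and use the region your key was created in.

```csharp
using System.Collections.Generic;

// Sketch of the Face API v1.0 "detect" URL for the options used in DetectFaces.
// ASSUMPTION: endpoint shape and attribute names follow the v1.0 REST API.
// The key goes in the Ocp-Apim-Subscription-Key header and the image bytes in the
// request body (application/octet-stream), so neither appears in the URL.
public static class DetectRequest
{
    public static string BuildUrl(string region, bool returnFaceId,
                                  bool returnFaceLandmarks,
                                  IEnumerable<string> attributes)
    {
        var query = $"returnFaceId={returnFaceId.ToString().ToLowerInvariant()}"
                  + $"&returnFaceLandmarks={returnFaceLandmarks.ToString().ToLowerInvariant()}";

        // Attributes are sent as one comma-separated list; omit it if none requested.
        var attributeList = string.Join(",", attributes);
        if (attributeList.Length > 0)
        {
            query += $"&returnFaceAttributes={attributeList}";
        }
        return $"https://{region}.api.cognitive.microsoft.com/face/v1.0/detect?{query}";
    }
}
```

For example, BuildUrl("westus", true, true, new[] { "age", "gender", "smile", "glasses", "facialHair" }) yields a URL ending in returnFaceAttributes=age,gender,smile,glasses,facialHair.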

Completed project is up on GitHub @ FaceApiUwp


You’ll see more in future examples! Stay tuned!

//Lance 🙂